How (and why) to Ramble on your goat sideways

Subnet masks are weird. Or at least, slightly mysterious.

I know what patterns of twos and fives and zeroes need to be typed into the subnet mask field in order to achieve various partitionings of a class C, and I understand the fundamental concept behind the technique, but for some reason it still has a sort of voodoo-ish element about it.

So a "class C" (*) is a /24 is 0xffffff00 is 255.255.255.0, etc. 24 bits of network, 8 bits of host.

(*) Technically, the network classes are an obsolete way of referring to the IPv4 address space partitioning. They mostly map to /8, /16, and /24, but the semantics are a little different in some obscure cases. Since the adoption of CIDR (Classless Inter-Domain Routing) in the mid-90s, speaking of a "class C" isn't technically accurate any more.
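For anyone who wants to poke at the arithmetic, here is a minimal Python sketch of the prefix-to-mask relationship described above. The function names and the 192.168.1.0/24 example network are made up for illustration; the only library used is the standard `ipaddress` module.

```python
import ipaddress


def mask_from_prefix(prefix_len):
    """Return (mask as integer, mask as dotted quad) for an IPv4 prefix length."""
    if not 0 <= prefix_len <= 32:
        raise ValueError("IPv4 prefix length must be between 0 and 32")
    # Set the top `prefix_len` bits (network part), clear the rest (host part).
    mask_int = (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF
    return mask_int, str(ipaddress.IPv4Address(mask_int))


def split_network(cidr, new_prefix):
    """Partition a network into equal subnets with a longer prefix."""
    network = ipaddress.ip_network(cidr)
    return [str(subnet) for subnet in network.subnets(new_prefix=new_prefix)]


if __name__ == "__main__":
    mask_int, dotted = mask_from_prefix(24)
    print(hex(mask_int), dotted)
    # Hypothetical example network, split into four /26 blocks.
    print(split_network("192.168.1.0/24", 26))
    print(mask_from_prefix(26)[1])
```

The "twos and fives" are just the decimal rendering of those high bits: each extra network bit past /24 halves the block, so /25 ends in 128, /26 in 192, /27 in 224, and so on.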

As for BGP, the protocol itself is actually pretty simple. It's just very generic and configurable, so the complex part is how you set up a network of BGP routers to propagate the routes you want to the places that you want.

--Ian

Boost Pope

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,615

Serious advice, having been in the exact inverse of this situation: Re-evaluate your relationship priorities.

Yes, I'm sure she's hot, good in bed, etc. But those aren't long-term values.

SADFab Destructive Testing Engineer

iTrader: (5)

Join Date: Apr 2014

Location: Beaverton, USA

Posts: 18,642

Total Cats: 1,866

Haha. She's awesome. She's actually gone hunting with me. The time in question was the morning after her 21st birthday. So combine quite hungover with about 6 dead ducks on a string and she wasn't a happy camper.

We've lived together about a year now and I've started the ring shopping process.

Boost Pope

Hmmmm. I'm conflicted here.

On the one hand, ducks.

On the other hand, 21 is awful young. I can think of only one marriage amongst all my friends which survived from such an age. Though, to be fair, that one is probably the strongest of all of them.

SADFab Destructive Testing Engineer

We are now both 23. Been together over 3 years. Oh and she buys me tools for anniversary presents. Goes to track days. And survived a 4 day camping trip to Laguna Seca in a Miata.

I'm pretty happy with her. She even cleans the garage and helps me work on the car sometimes.

She's leaving for nursing school in 6 months. I won't be going with her, because steady engineering job here. Marriage is a few years down the road still. Engagement is a commitment to stay together even though she's leaving for a year.

Boost Pope

ECHO OF THE BUNNYMEN: HOW AMD WON, THEN LOST

by: Brian Benchoff

In 2003, nothing could stop AMD. This was a company that moved from a semiconductor company based around second-sourcing Intel designs in the 1980s to a Fortune 500 company a mere fifteen years later. AMD was on fire, and with almost a 50% market share of desktop CPUs, it was a true challenger to Intel's throne.

AMD began its corporate history like dozens of other semiconductor companies: second sourcing dozens of other designs from dozens of other companies. The first AMD chip, sold in 1970, was just a four-bit shift register. From there, AMD began producing 1024-bit static RAMs, ever more complex integrated circuits, and in 1974 released the Am9080, a reverse-engineered version of the Intel 8080.

AMD had the beginnings of something great. The company was founded by Jerry Sanders, electrical engineer at Fairchild Semiconductor. At the time Sanders left Fairchild in 1969, Gordon Moore and Robert Noyce, also former Fairchild employees, had formed Intel a year before.

While AMD and Intel shared a common heritage, history bears out that only one company would become the king of semiconductors. Twenty years after these companies were founded they would find themselves in a bitter rivalry, and thirty years after their beginnings, they would each see their fortunes change. For a short time, AMD would overtake Intel as the king of CPUs, only to stumble again and again to a market share of ten to twenty percent. It only takes excellent engineering to succeed, but how did AMD fail? The answer is Intel. Through illegal practices and ethically questionable engineering decisions, Intel would succeed to be the current leader of the semiconductor world.

The Break From Second Sourcing CPUs

The early to mid 1990s were a strange time for the world of microprocessors and desktop computing. By then, the future was assuredly in Intel's hands. The Amiga with its Motorola chips had died, Apple had switched over to IBM's PowerPC architecture but still only had a few percent of the home computer market. ARM was just a glimmer in the eye of a few Englishmen, serving as the core of the ridiculed Apple Newton and the brains of the RiscPC. The future of computing was then, like now, completely in the hands of a few unnamed engineers at Intel.

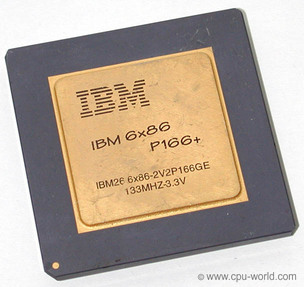

The cream had apparently risen to the top, a standard had been settled upon, and newcomers were quick to glom onto the latest way to print money. In 1995, Cyrix released the 6x86, a processor that was able – in some cases – to outperform their Intel counterpart.

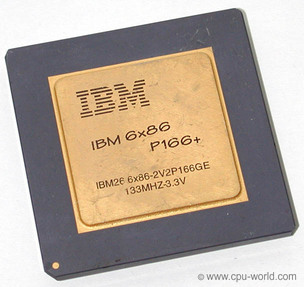

Cyrix's P166+, a CPU faster than an equivalent Intel Pentium clocked at 166MHz.

Although Cyrix had been around for the better part of a decade by 1995, their earlier products were only x87 coprocessors, floating point units and 386 upgrades. The release of the 6x86 gave Cyrix its first orders from OEMs, landing the chip in low-priced Compaqs, Packard Bells, and eMachines desktops. For tens of thousands of people, their first little bit of Internet would leak through phone lines with the help of a Cyrix CPU.

During this time, AMD also saw explosive growth with their second-sourced Intel chips. An agreement between AMD and Intel penned in 1982 allowed a 10-year technology exchange agreement that gave each company the rights to manufacture products developed by the other. For AMD, this meant cloning 8086 and 286 chips. For Intel, this meant developing the technology behind the chips.

1992 meant an end to this agreement between AMD and Intel. For the previous decade, OEMs gobbled up clones of Intel's 286 processor, giving AMD the resources to develop their own CPUs. The greatest of these was the Am386, a clone of Intel's 386 that was nearly as fast as a 486 while being significantly cheaper.

The Performance of a 486 for your old 386.

It's All About The Pentiums

AMD's break from second-sourcing with the Am386 presented a problem for the Intel marketing team. The progression from 8086 to the forgotten 80186, the 286, and 486 could not continue. The 80586 needed a name. After what was surely several hundred thousand dollars, a marketing company realized pent- is the Greek prefix for five, and the Pentium was born.

The Pentium was a sea change in the design of CPUs. The 386, 486, and Motorola's 68k line were relatively simple scalar processors. There was one ALU in these earlier CPUs, one bit shifter, and one multiplier. One of everything, each serving different functions. In effect, these processors aren't much different from CPUs constructed in Minecraft.

Intel's Pentium introduced the first superscalar processor to the masses. In this architecture, multiple ALUs, floating point units, multipliers, and bit shifters existed on the same chip. A decoder would shift data around to different ALUs within the same clock cycle. It's an architectural precedent for the quad-core processors of today, but completely different. If owning two cars is the equivalent of a dual-core processor, putting two engines in the same car would be the equivalent of a superscalar processor.

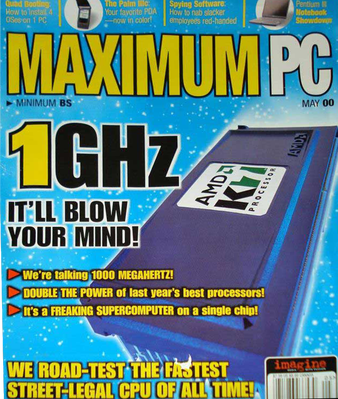

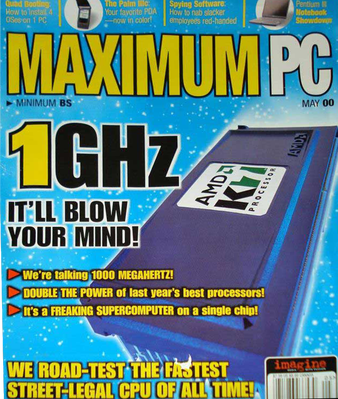

By the late 90s, AMD had rightfully earned a reputation for having processors that were at least as capable as the Intel offerings, while computer magazines beamed with news that AMD would unseat Intel as the best CPU performer. AMD stock grew from $4.25 in 1992 to ten times that in early 2000. In that year, AMD officially won the race to a Gigahertz: it introduced an Athlon CPU that ran at 1000 MHz. In the words of the infamous Maximum PC cover, it was the world's fastest street legal CPU of all time.

Underhandedness At Intel

Gateway, Dell, Compaq, and every other OEM shipped AMD chips alongside Intel offerings from the year 2000. These AMD chips were competitive even when compared to their equivalent Intel offerings, but on a price/performance ratio, AMD blew them away. Most of the evidence is still with us today; deep in the archives of overclockers.com and tomshardware, the default gaming and high performance CPU of the Bush administration was AMD.

For Intel, this was a problem. The future of CPUs has always been high performance computing. It's a tautology given to us by unshaken faith in Moore's law and the inevitable truth that applications will expand to fill all remaining CPU cycles. Were Intel to lose the performance race, it would all be over.

And so, Intel began their underhanded tactics. From 2003 until 2006, Intel paid $1 Billion to Dell in exchange for not shipping any computers using CPUs made by AMD. Intel held OEMs ransom, encouraging them to only ship Intel CPUs by way of volume discounts and threatening OEMs with low priority during CPU shortages. Intel engaged in false advertising and misrepresented benchmark results. This came to light after a Federal Trade Commission settlement and an SEC probe disclosed Intel paid Dell up to $1 Billion a year not to use AMD chips.

In addition to those kickbacks Intel paid to OEMs to only sell their particular processors, Intel even sold their CPUs and chipsets for below cost. While no one was genuinely surprised when this was disclosed in the FTC settlement, the news only came in 2010, seven years after Intel started paying off OEMs. One might expect AMD to see an increase in market share after the FTC and SEC sent them through the wringer. This was not the case; since 2007, Intel has held about 70% of the CPU market share, with AMD taking another 25%. It's what Intel did with their compilers around 2003 that would earn AMD a perpetual silver medal.

The War of the Compilers

Intel does much more than just silicon. Hidden inside their design centers are the keepers of x86, and this includes the people who write the compilers for x86 processors. Building a compiler is incredibly complex work, and with the right amount of optimizations, a compiler can turn code into an enormous executable which is capable of running on the latest Skylake processors to the earliest Pentiums, with code optimized for each and every processor in between.

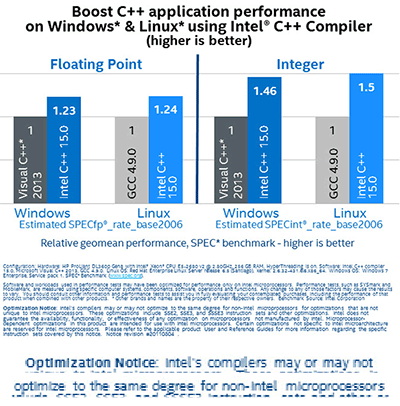

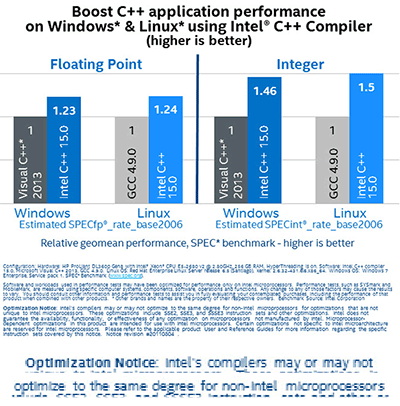

Intel's compilers are the best in their class, provided you use Intel CPUs.

While processors made a decade apart can see major architectural changes and processors made just a few years apart can see changes in the feature size of the chip, in the medium term, chipmakers are always adding instructions. These new instructions – the most famous example by far would be the MMX instructions introduced by Intel – provide the chip with new capabilities. With new capabilities come more compiler optimizations, and more complexity in building compilers. This optimization and profiling for different CPUs does far more than the -O3 flag in GCC; there is a reason Intel produces the fastest compilers, and it's entirely due to the optimization and profiling afforded by these compilers.

Beginning in 1999 with the Pentium III, Intel introduced SSE instructions to their processors. This set of about 70 new instructions provided faster calculations for single precision floats. The expansion to this, SSE2, introduced with the Pentium 4 in 2001 greatly expanded floating point calculations and AMD was obliged to include these instructions in their AMD64 CPUs beginning in 2003.

Although both Intel and AMD produced chips that could take full advantage of these new instructions, the official Intel compiler was optimised to only allow Intel CPUs to use these instructions. Because the compiler can make multiple versions of each piece of code optimized for a different processor, it's a simple matter for an Intel compiler to choose not to use improved SSE, SSE2, and SSE3 instructions on non-Intel processors. Simply by checking if the vendor ID of a CPU is 'GenuineIntel', the optimized code can be used. If that vendor ID is not found, the slower code is used, even if the CPU supports the faster and more efficient instructions.
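To make the mechanism concrete, here is a rough Python sketch of the two dispatch policies that paragraph contrasts. It is only an illustration: the function names and the feature-set representation are hypothetical, and this is not Intel's actual dispatcher code. The vendor strings `GenuineIntel` and `AuthenticAMD` are the real CPUID vendor IDs.

```python
def dispatch_by_vendor(vendor_id, features):
    """Vendor-gated dispatch, as the article describes it.

    The fast path is taken only when the CPU identifies itself as Intel,
    regardless of which instruction sets it actually supports.
    """
    if vendor_id == "GenuineIntel" and "sse2" in features:
        return "sse2"
    return "generic"


def dispatch_by_feature(vendor_id, features):
    """Feature-based dispatch: the vendor string is ignored entirely."""
    if "sse2" in features:
        return "sse2"
    return "generic"


if __name__ == "__main__":
    # Hypothetical CPUs described as (vendor string, supported feature flags).
    cpus = [
        ("GenuineIntel", {"sse", "sse2"}),
        ("AuthenticAMD", {"sse", "sse2"}),
    ]
    for vendor, features in cpus:
        print(vendor,
              dispatch_by_vendor(vendor, features),
              dispatch_by_feature(vendor, features))
```

With the vendor-gated version, the AMD entry falls through to the generic path even though it reports SSE2; the feature-based version treats both CPUs the same.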

This is playing to the benchmarks, and while this trick of the Intel compiler has been known since 2005, it is a surprisingly pernicious way to gain market share.

We'll be stuck with this for a while. That's because all code generated by an Intel compiler exploiting this trick will have a performance hit on non-Intel CPUs. Every application developed with an Intel compiler will always perform worse on non-Intel hardware. It's not a case of Intel writing a compiler for their chips; Intel is writing a compiler for instructions present in both Intel and AMD offerings, but ignoring the instructions found in AMD CPUs.

The Future of AMD is a Zen Outlook

There are two giants of technology whose products we use every day: Microsoft and Intel. In 1997, Microsoft famously bailed out Apple with a $150 Million investment of non-voting shares. While this was in retrospect a great investment – had Microsoft held onto those shares, they would have been worth Billions – Microsoft's interest in Apple was never about money. It was about not being a monopoly. As long as Apple had a few percentage points of market share, Microsoft could point to Cupertino and say they are not a monopoly.

And such is Intel's interest in AMD. In the 1980s, it was necessary for Intel to second source their processors. By the early 90s, it was clear that x86 would be the future of desktop computing and having the entire market is the short path to congressional hearings and comparisons to AT&T. The system worked perfectly until AMD started innovating far beyond what Intel could muster. There should be no surprise that Intel's underhandedness began in AMD's salad days, and Intel's practices of selling CPUs at a loss ended once they had taken back the market. Permanently disabling AMD CPUs through compiler optimizations ensured AMD would not quickly retake this market share.

It is in Intel's interest that AMD not die, and for this, AMD must continue innovating. This means grabbing whatever market they can – all current-gen consoles from Sony, Microsoft, and Nintendo feature AMD chipsets. This also means AMD must stage their comeback, and in less than a year this new chip, the Zen architecture, will land in our sockets.

In early 2012, the architect of the original Athlon 64 processor returned to AMD to design Zen, AMD's latest architecture and the first that will be made with a 14nm process. Only a few months ago, the tapeout for Zen was completed, and these chips should make it out to the public within a year.

Is this AMD's answer to a decade of deceit from Intel? Yes and no. One would hope Zen and the K12 designs are the beginning of a rebirth that would lead to a true competition not seen since 2004. The product of these developments is yet to be seen, but the market is ready for competition.

Echo of the Bunnymen: How AMD Won, Then Lost | Hackaday

Elite Member

iTrader: (37)

Join Date: Apr 2010

Location: Very NorCal

Posts: 10,441

Total Cats: 1,899

I think I owned the IBM-branded version of that Cyrix 6x86 P166+ chip.

I bought it while all my monied friends were doing builds using genuine Intel P120 and P133 chips. Socket 5/Socket 7 was a glorious time to be building gaming systems, a time when you could see an actual, significant increase in performance with a CPU upgrade while all other parts in the system remained the same.

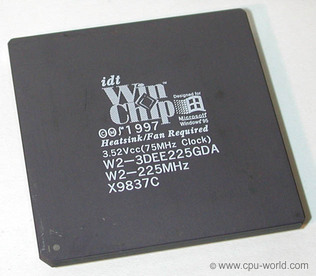

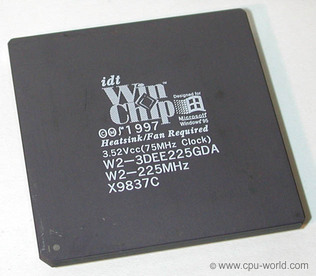

I also owned one of these bad boys:

I was the first one of my friends to have a system over 200MHz. I only discovered after I bought it that MHz was not everything. It was quite the arms race.

So i'm getting annoyed with replacing batteries on my electric drills and ****. Few years ago i bought drills and an impact wrench and **** that i've used for 2-3 jobs total and the batteries are shot from sitting. **** replacing all the batteries since they are all different sizes.

I plan on hot wiring the accessories so the handle has a plug that all accessories share and want to use the same battery with a wire.

That said, recommend me an 18 volt battery that will work for the job. Normal hand drill batteries are ~2000 mAh, right? So for a separate battery maybe 10,000 mAh is plenty?

Something half as big as this but 18 volt.

https://www.amazon.com/VMAX600-Batte...+cycle+battery
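A quick back-of-the-envelope check on those capacity numbers, as a small Python sketch. It assumes both packs are a nominal 18 V and takes the roughly 2000 mAh and 10,000 mAh figures from the post at face value; the function name is just for illustration.

```python
def pack_energy_wh(nominal_volts, capacity_mah):
    """Stored energy in watt-hours: volts times amp-hours."""
    return nominal_volts * capacity_mah / 1000


if __name__ == "__main__":
    drill_pack = pack_energy_wh(18, 2000)      # typical pack, per the post
    external_pack = pack_energy_wh(18, 10000)  # proposed shared battery
    print(drill_pack, "Wh vs", external_pack, "Wh")
    print("ratio:", external_pack / drill_pack)
```

At the same voltage the energy scales directly with the mAh rating, so the bigger pack would hold about five times what a single drill battery does, before any losses in the extra wiring.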

Elite Member

iTrader: (5)

Join Date: Oct 2011

Location: Detroit (the part with no rules or laws)

Posts: 5,677

Total Cats: 800

Li-ion batteries usually don't come back because of the chargers. Once a pack drains down past a certain point, these "intelligent" chargers have a function that refuses to charge it again.

You can in fact charge them manually if you have a power supply. Damn Makitas at work die all the time when people run the tools way down. I have my own way of doing it, other people have theirs.

There are YouTube videos.

Probably many more.

Once you bring the voltage back up, the charger will know the pack isn't "dead" anymore and charge it again.

"Storing" rechargeable batteries for a few years pretty much guarantees their demise. There's a reason "battery tender" makes a killing in the late fall, when all the boys are putting away their summer toys and remembering how much their new batteries cost them the preceding spring. Liquid electrolyte batteries discharge enough to eventually freeze and kill themselves, and lithium-based batteries undergo irreversible cell damage once the charge falls below a certain voltage.

My personal drill is a Ridgid 18v lithium drill. I did construction work (glazing) for a year and a half with it where it was my primary tool. It came with the compact batteries that would get me through a full day of work, and the fast charger put a full charge into them in 20 minutes. That was back in 2006-2007. In 2014, both batteries bit the dust a couple months apart. Since I wasn't doing construction any longer, I bought a single full-size battery to replace them. I still use the drill probably once a month, and I charge it when it dies - about once every 9 or 10 months probably. Been a solid tool for a decade now.

Boost Pope

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,615

Also, there's a reason why the BMSes in modern lithium packs are designed to shut the battery down entirely (and disallow recharging) once it discharges below a certain level. When a cell is drained below its rated minimum voltage, the anode current collector (which is typically copper) begins to dissolve into the electrolyte. If the battery is subsequently recharged, the copper will precipitate back into metallic form, and if a large enough amount of it does this all in the same place, it can cause an internal short-circuit leading to a runaway thermal failure (fire / explosion).

It's not common, but it happens enough that companies spend the extra money to install safety-cutoff circuits to prevent it.

Also, the degradation rate of lithium-ion batteries is actually higher at 100% SOC than at around 60% SOC. They still die on their own over time, but you might get an extra six months out of a pack if you don't shelve it while completely full.
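The shutdown-and-refuse-to-recharge behavior described above can be sketched as a latching lockout. This is an illustration only: the class name, the method names, and both voltage thresholds are hypothetical, and a real BMS takes its limits from the cell datasheet and does this in dedicated hardware. The five-cell example assumes a typical 18 V pack built from five cells in series.

```python
from dataclasses import dataclass
from typing import Sequence

# ASSUMPTION: illustrative per-cell thresholds, not taken from any datasheet.
DISCHARGE_CUTOFF_V = 2.5  # stop powering the tool below this
LOCKOUT_V = 2.0           # a cell seen below this permanently disables the pack


@dataclass
class PackMonitor:
    """Toy model of an undervoltage lockout (hypothetical, for illustration)."""
    locked_out: bool = False

    def update(self, cell_voltages: Sequence[float]) -> None:
        # The lockout latches: once any cell has been deeply discharged,
        # a later healthy-looking reading does not clear it.
        if min(cell_voltages) < LOCKOUT_V:
            self.locked_out = True

    def may_discharge(self, cell_voltages: Sequence[float]) -> bool:
        return (not self.locked_out
                and min(cell_voltages) >= DISCHARGE_CUTOFF_V)

    def may_charge(self) -> bool:
        return not self.locked_out


monitor = PackMonitor()
monitor.update([3.7, 3.7, 1.8, 3.7, 3.7])  # one cell dragged far too low
monitor.update([3.7, 3.7, 3.7, 3.7, 3.7])  # voltage forced back up externally
print(monitor.may_charge())  # the latch still refuses the charge
```

The latch is the design point: in this sketch, bringing the voltage back up from outside does not clear the lockout, because the damage from the deep discharge has already happened.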
