Yet another video card thread

#41

Boost Czar

iTrader: (62)

Join Date: May 2005

Location: Chantilly, VA

Posts: 79,501

Total Cats: 4,080

WHOA WHOA WHOA WHOA.

Father once spoke of an angel, I used to dream he'd appear. Here in this forum he calls me softly, somewhere inside, hiding. Somehow I know he's always with me. He, the unseen genius.

Grant me your glory! Angel of GPUs, hide no longer! Secret, strange, Angel.

#42

Woah, I just looked up the GTX 280. That's a great card to pick up for free. I thought I had done well when I snagged an 8800GTS for $40.

GeForce 8800 GTS (G92) vs GeForce GTX 280 – Performance Comparison Benchmarks @ Hardware Compare

#43

Boost Pope

Thread Starter

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,608

Ironically, I feel even more like this guy than ever now:

I'm sure I probably won't be able to run Call of Duty XVI (or whatever they're up to now) at 1920x1200 in the highest quality on this card, but should I need to, I can just turn down some of the quality settings. I honestly don't care whether or not I can see the entire world ray-traced to a depth of 16 intersections reflecting off of every single drop of blood sprayed on a wall. That does not significantly enhance gameplay.

I still cannot get over how utterly fluid the display is now. When I first fired it up, I was actually sort of repulsed by it; kind of like how all the high-end LCD/LED TVs which support upconversion to 600 Hz refresh rates try to do all this fancy motion interpolation which winds up making movies look like ESPN-HD football games. In that context it's horribly off-putting, but the benefits in this context are obvious and massive.

I guess we'll wait and see. Maybe in 3 or 4 years I'll have to upgrade to a 3 or 4 year old card that supports DX11.

I won't claim to be an expert here, but I did a bit of light searching and found a couple of facts.

According to the Valve Developer's Guide, Source Engine games typically communicate with the server at a rate of only 20-30 packets per second. (source.)

Further, this source suggests that for the default network config in TF2, the maximum effective datarate is 12.2 KBps, which again is exceedingly trivial.

I'm not saying that the overhead here is zero. But the load should be so tiny that its effect on rendered framerate should be almost immeasurable- certainly not 10% under normal circumstances.
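For a rough sense of scale, here is a back-of-the-envelope sketch in Python using only the two figures quoted above (20-30 packets per second, 12.2 KBps). The 100 Mbit/s link speed and the reading of "KBps" as 1000 bytes per second are assumptions added for comparison, not something taken from either source.

```python
# Figures quoted in the post above.
PACKET_RATES = (20, 30)   # packets per second
MAX_DATARATE = 12_200     # bytes/s, assuming "12.2 KBps" means 12.2 * 1000 bytes/s

# Assumption for comparison only: a plain 100 Mbit/s Ethernet port.
LINK_CAPACITY = 100_000_000 // 8  # bytes/s


def bytes_per_packet(datarate, packets_per_second):
    """Average bytes per packet if the whole datarate were actually used."""
    return datarate / packets_per_second


def link_utilisation(datarate, link_capacity):
    """Fraction of the link consumed by the game traffic."""
    return datarate / link_capacity


if __name__ == "__main__":
    for rate in PACKET_RATES:
        size = bytes_per_packet(MAX_DATARATE, rate)
        print(f"{rate} packets/s -> at most {size:.0f} bytes per packet")
    share = link_utilisation(MAX_DATARATE, LINK_CAPACITY)
    print(f"share of a 100 Mbit/s link: {share:.4%}")
```

Under those assumptions the traffic tops out at roughly a tenth of a percent of the link, with packets of a few hundred bytes each.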

Thraddax. My avatar is the Giant Chicken.

But I appreciate it.

It does seem to consume some power. This is to be expected, given the fact that it has 240 processor cores scattered across a die the size of Nevada, with more transistors than there are people in China. (That part really freaks me out. 1.4 billion is an extremely large number of anything, much less things that fit into a box under my desk.)

Stupidly, I failed to benchmark my system's power draw under actual gaming load with the previous card (the maximum TDP for the GT210 is a paltry 31 watts.) I did, however, benchmark the system's total idle load at 45-50 watts as measured on the AC side by the UPS. With the new card, idle load has jumped to 95-110 watts, which is a staggering increase. I'm really quite surprised by this, as I would have assumed that the vast majority of the card would simply power itself off when not in use, but this does not seem to be the case.
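To put those idle numbers in perspective, a quick arithmetic sketch. The wattages are the UPS readings quoted above; the assumption that the box idles 24 hours a day all year is hypothetical and only there to get an upper bound.

```python
IDLE_BEFORE_W = (45, 50)   # old card, measured (low, high) range in watts
IDLE_AFTER_W = (95, 110)   # new card, measured (low, high) range in watts


def delta_range(before, after):
    """Smallest and largest possible increase given two (low, high) ranges."""
    return after[0] - before[1], after[1] - before[0]


def annual_kwh(watts, hours_per_day=24):
    """Energy over a year for a constant load; hours_per_day is an assumption."""
    return watts * hours_per_day * 365 / 1000


if __name__ == "__main__":
    low, high = delta_range(IDLE_BEFORE_W, IDLE_AFTER_W)
    print(f"extra idle draw: {low}-{high} W")
    print(f"per year, always on: {annual_kwh(low):.1f}-{annual_kwh(high):.1f} kWh")
```

That is an extra 45-65 W at idle, or somewhere around 390-570 kWh a year if the machine never sleeps.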

It is reasonably quiet. It is audible at idle, but only just barely. (It should be noted that the rest of the system is almost totally silent- nothing but 120mm fans, and one of the two mechanical hard drives is normally spun down except for the nightly backup operation.) During gameplay the fan speeds up a bit, though it's not noticeable with headphones on. Certainly nothing at all like the "screaming jet engine" that some users bitched about in the reviews.

#44

Boost Czar

iTrader: (62)

Join Date: May 2005

Location: Chantilly, VA

Posts: 79,501

Total Cats: 4,080

Angel, I hear you. Speak, I listen. Stay by my side. Guide me. Angel, my soul was weak; forgive me. Enter at last, Master.

Angel of GPUs, guide and guardian, grant to me your glory. Angel of GPUs, hide no longer. Come to me, strange Angel.

seriously.........

#45

Yeah, I understand the underlying concept of parking the TCP/IP stack in software on the main CPU vs. offloading it to a dedicated co-processor. I just question how it's even possible to code one so badly that it consumes enough system resources to cause a 10% reduction in the video framerate. In relative terms, the amount of computational power required to service an ethernet port is trivial, particularly given the fact that the actual network datarates involved in online game play are surprisingly small.

It is very easy to code one so badly.

AnandTech - BigFoot Networks Killer NIC: Killer Marketing or Killer Product? As you can see, even AnandTech tested this extensively. How they achieved their results baffled them as much as it does you or me, but they did achieve repeatable, benchmarkable results, and these cards had the snot reviewed out of them across dozens if not hundreds of reviews.

To me, the cards still aren't worth the $70-$100 they are asking, mind you, but the basic idea is factual and testable in nature.

#46

this stuff is contagious. so brain's angel appeared before me (she told me to tell brain she's sorry) and offered me 2 gtx 480's. i don't actually have any working x86 desktop hardware at home right now, everything broke lol. is it worth buying to support 2 video card or is one fine?

if 2 video cards, do both slots need to be x16 or can i make do with 2 x8's?

#47

Boost Pope

Thread Starter

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,608

Your angel is giving away GTX 480s? Sheesh- my angel needs to get with the program.

Given how much more highly the GTX 480 scores than the GTX 280 in the benchmarks, I can't imagine needing two of them. But that's just me, of course.

I don't know a lot about these fancy cards, but I do know one thing. A card with an x16 edge connector will not physically fit into an x8 slot. I suppose that if FaeFlora owned the cards in question he could figure out a way to make them fit into x8 slots; however, this might have a negative impact on their performance.

Last edited by Joe Perez; 07-17-2012 at 12:05 PM. Reason: Added FaeFae joke.

#48

Do not bother with getting two video cards to play nicely. Just get the fastest single card you can afford (receive). I've run dual-GPU setups from both card manufacturers and have never ever been happy with them. Between waiting for driver updates for the latest games, microstuttering, and flickering in all Source games, I won't be touching a dual setup until they have made significant advances in compatibility, which will be nevar.

#49

You can get an ATI motherboard with dual x16 support, but Intel architecture doesn't support dual x16 slots. You will have to put one card in an x16 slot, and the second card in an x8 slot. The x8 slot will either be "open" at the back, allowing the remaining connectors for the x16 slot to freeball, or else the x8 slot will physically be x16 in size, while having internal connectors to only support x8 mode.

I run a single video card now, while I used to run dual cards. I never noticed the dual-card "micro-freezing" that some people are bothered by, but it sounds like they've made pretty significant improvements on it in the last 18-24 months.

#50

Boost Pope

Thread Starter

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,608

I'm looking at some IA boards on Newegg that have up to eight physical x16 slots, which seem to be configurable in a variety of ways to allocate channel-capacity as needed. A few can only drive one slot at x16 and the other at x8 (or drop both to x8 when two cards are installed); however, others claim to be able to support two x16 cards at full bandwidth.

Examples:

Newegg.com - ASRock X79 Extreme4 LGA 2011 Intel X79 SATA 6Gb/s USB 3.0 ATX Intel Motherboard

Newegg.com - ASUS P9X79 DELUXE LGA 2011 Intel X79 SATA 6Gb/s USB 3.0 ATX Intel Motherboard with UEFI BIOS

I'd still agree that dual cards are probably more trouble than they're worth.

#53

Boost Pope

Thread Starter

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,608

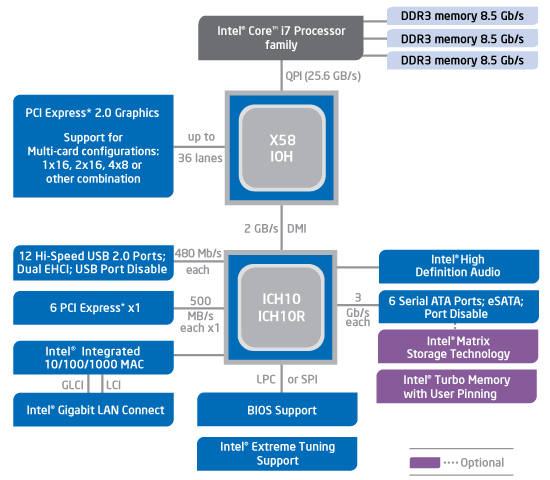

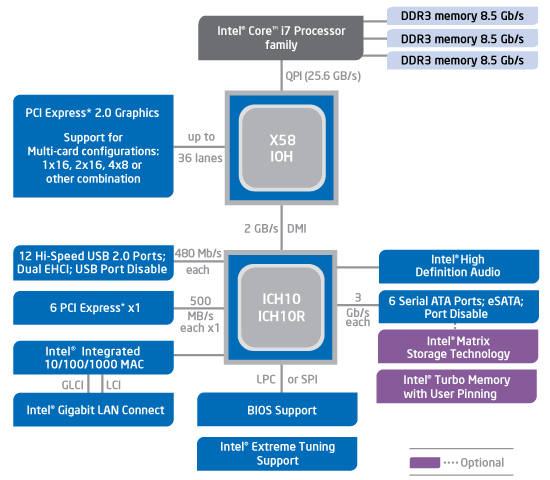

Also, some clarification on the issue of PCIe 8x vs 16x, etc., as I was a little unclear on this, and have done some learning.

There are four different physical standards for PCIe:

Mechanically, the slots are downwards-compatible, meaning that you can plug an x1 card into an x4 slot, for instance. But you cannot plug an x16 card into an x8 slot (or similar) as it just won't physically fit without using a hacksaw.

What I didn't realize was that the PCIe standard also allows dynamic allocation of bus resources, such that an x16 card plugged into an x16 slot might actually be set up to run at x8 speed (or more properly, x8 width.) Certain older IA MoBo chipsets didn't support running two x16 cards at full-width, so commonly boards of this architecture with two x16 slots would turn them both down to x8 when two cards were installed, splitting the bandwidth in half.

So that would be a case where you could claim to have an x16 card installed in an x8 slot- technically it's an x16 slot running at half-capacity, not a physical x8 slot. Not as horrible as it sounds, given that the quantity of data passing across the PCIe bus to the graphics cards pales in comparison to the amount of data being generated on the card itself, between the on-board memory and the GPU.
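A minimal sketch of that width allocation, assuming the simplified rule that a link runs at the narrower of what the card and the slot's electrical wiring support. The per-lane figures are the commonly cited approximate per-direction rates for PCIe 1.x, 2.0 and 3.0; the function names and the 16-lane budget are made up for illustration, and real link training is more involved than a `min()`.

```python
# Approximate usable bandwidth per lane, per direction, in MB/s.
PER_LANE_MB_S = {"1.x": 250, "2.0": 500, "3.0": 985}


def split_lanes(total_lanes, cards_installed):
    """Hypothetical chipset that divides a fixed lane budget evenly between cards."""
    return total_lanes // cards_installed


def negotiated_width(card_lanes, slot_electrical_lanes):
    """Simplified rule: the link runs at the narrower of the two ends."""
    return min(card_lanes, slot_electrical_lanes)


def bandwidth_mb_s(lanes, generation):
    """Approximate one-way bandwidth for a link of the given width."""
    return lanes * PER_LANE_MB_S[generation]


if __name__ == "__main__":
    for cards in (1, 2):
        slot_lanes = split_lanes(16, cards)
        width = negotiated_width(16, slot_lanes)
        rate = bandwidth_mb_s(width, "2.0")
        print(f"{cards} card(s): x{width}, ~{rate} MB/s each way on PCIe 2.0")
```

On a board with a 16-lane budget, the second card halves each link to x8, which on PCIe 2.0 is still on the order of 4 GB/s in each direction.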

The newer stuff, however, has more bandwidth available to service graphics cards, and can thus support 2 cards operating at x16, four cards at x8, etc:

#55

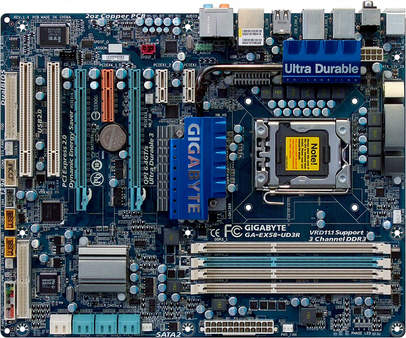

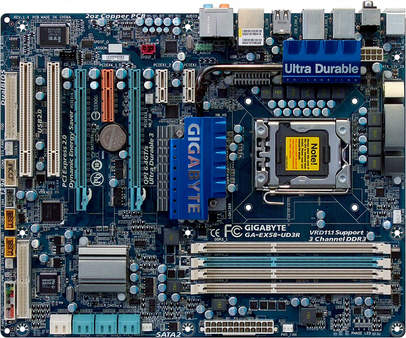

Example of a pci-e x4 slot designed to accommodate an x8 or x16 capable card:

review found here:

Gigabyte EX58-UD3R : X58 On A Budget: Seven Sub-$200 Core i7 Boards

#56

The real-time clock battery unfortunately prevents insertion of anything longer than an x4 card in that open-ended slot.

Sadly, you can't just stick in any card you want. If it's an x8 or x16 card, it has to be able to run at x1 or x4 (most likely x1, which I read is the native speed for open-ended slots, for compatibility reasons).
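The mechanical side of this can be sketched as a toy model. It is not anything from the PCIe spec: the `Slot` fields and the `fits` function are hypothetical names, and the `obstructed` flag stands in for things like the RTC battery mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    physical_lanes: int        # connector length, e.g. 4 for an x4 slot
    open_ended: bool = False   # back of the connector is cut open
    obstructed: bool = False   # something on the board blocks the overhang


def fits(card_lanes: int, slot: Slot) -> bool:
    """True if a card with this edge connector can physically be inserted."""
    if card_lanes <= slot.physical_lanes:
        return True
    # A longer card only goes in if the slot is open at the back
    # and nothing sits in the way of the part that hangs out.
    return slot.open_ended and not slot.obstructed


if __name__ == "__main__":
    print(fits(1, Slot(4)))                                     # short card, longer slot
    print(fits(16, Slot(8)))                                    # closed x8 slot
    print(fits(16, Slot(4, open_ended=True)))                   # open-ended x4 slot
    print(fits(16, Slot(4, open_ended=True, obstructed=True)))  # battery in the way
```

Whether a card that fits will actually come up at the narrower width is a separate question that depends on the card.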

#58

Boost Czar

iTrader: (62)

Join Date: May 2005

Location: Chantilly, VA

Posts: 79,501

Total Cats: 4,080

this stuff is contagious. so brain's angel appeared before me (she told me to tell brain she's sorry) and offered me 2 gtx 480's. i don't actually have any working x86 desktop hardware at home right now, everything broke lol. is it worth buying to support 2 video card or is one fine?

if 2 video cards, do both slots need to be x16 or can i make do with 2 x8's?

WTF. MY ANGEL HAS YET TO APPEAR BEFORE EVEN ME!!!!!!!!!!!! ---- MY LIFE.

#59

Boost Pope

Thread Starter

iTrader: (8)

Join Date: Sep 2005

Location: Chicago. (The less-murder part.)

Posts: 33,050

Total Cats: 6,608

Funny story:

In the 80s, there were dozens of different standards for external peripherals such as keyboards, mice, printers, plotters, scanners, floppy drives, CD-ROMs, hard drives, tape drives, modems, audio analyzers, ground-penetrating radar arrays, etc.

Now, in the 10s, we have USB- a single universal standard by which all peripherals may connect to all computers.

In the 80s, there was ISA, a single universal standard by which all internal expansion cards could plug into all x86-class machines.

Now, in the 10s, I'm not even sure how many expansion busses (and operating modes thereof) we have. There's AGP (four different versions), PCI (3.3 and 5v), Mini-PCI (Type I, II and III), PCI-X, PCI-e (1x, 4x, 8x, 16x, plus half-height versions to fit into small-form-factor PCs), Mini PCI-e, ExpressCard (two different sizes), and we still occasionally bump into MCA (both 16 and 32 bit).

Last edited by Joe Perez; 07-17-2012 at 07:29 PM. Reason: mice, not mics.